Joint membership inference for machine learning models

This is a gentle introduction to our work, which will be presented at NDSS 2026. Code and datasets are available on GitHub.

Introduction

Membership inference attacks (MIAs) have become a standard way to evaluate the empirical privacy risks of machine learning models. The first modern MIA was introduced in 2017

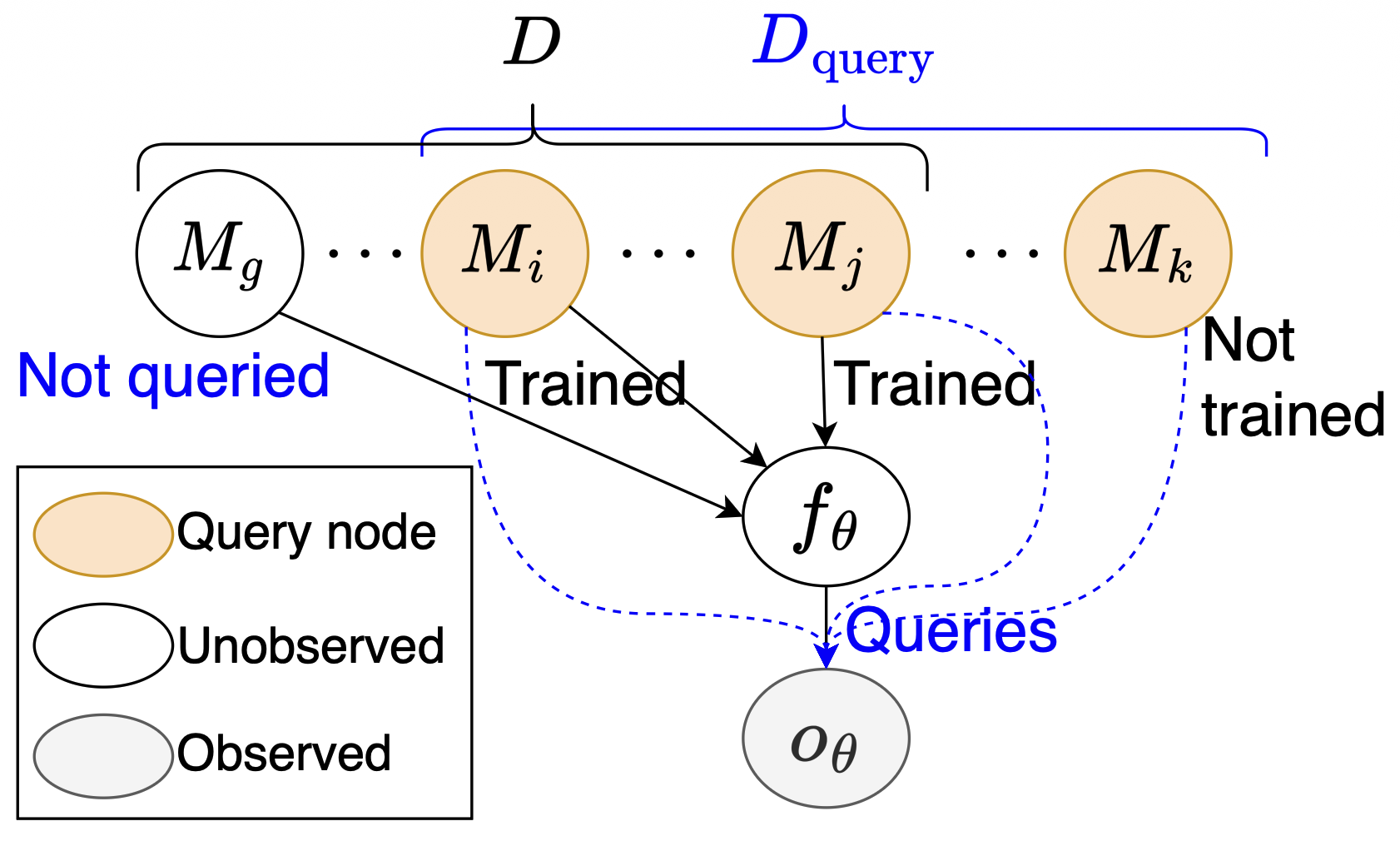

All existing MIAs follow a one-by-one procedure: for each membership query instance, the adversary collects an attack signal (from black-box queries or white-box gradients) and outputs a membership prediction for that single instance. At first glance, this approach appears reasonable: the data instances in the training set are typically assumed to be independently and identically distributed (i.i.d.), which implies that the membership indicators M_i and M_j of any two instances are marginally independent. However, this independence no longer holds once we condition on the model’s output over the entire membership query dataset. In this conditional setting, the membership indicators, although marginally independent, become dependent due to the collider effect induced by the model’s output, as shown below.

This intriguing observation sparked a key question for us: can we design a more powerful MIA by exploiting this inherent dependence between instances?
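The collider effect described above can be checked by hand on a toy example. The sketch below is only an illustration, not our attack: it assumes two fair, independent membership indicators and a stand-in "model output" O that is simply their sum. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def conditional_joint(observed_sum):
    """Exact P(M_i, M_j | O = observed_sum), where O = M_i + M_j (toy assumption)."""
    prior = Fraction(1, 4)  # independent fair coins: every pair is equally likely
    joint = {
        (mi, mj): prior
        for mi, mj in product((0, 1), repeat=2)
        if mi + mj == observed_sum
    }
    total = sum(joint.values())
    return {pair: p / total for pair, p in joint.items()}


def prob_mi_given(cond, mj=None):
    """P(M_i = 1 | O), or P(M_i = 1 | O, M_j = mj) when mj is given."""
    rows = {pair: p for pair, p in cond.items() if mj is None or pair[1] == mj}
    total = sum(rows.values())
    return sum(p for (mi, _), p in rows.items() if mi == 1) / total


cond = conditional_joint(observed_sum=1)
print(prob_mi_given(cond))        # 1/2
print(prob_mi_given(cond, mj=1))  # 0
```

Before observing O, learning M_j tells us nothing about M_i. After conditioning on O = 1, learning M_j = 1 rules out M_i = 1 entirely. A real model's output is a far noisier function of its training set, so the dependence is weaker, but the mechanism is the same.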

A new formulation for MIA

To achieve this, we first re-examined the standard security game for MIAs. We found that the existing security game

In the adaptive setting, the adversary receives the full query set and is then allowed to train new shadow models. This enables the attacker to leverage information about both the "in" (member) and "out" (non-member) behavior distributions specific to the query set.

It’s worth noting that some studies

Cascading Membership Inference Attack (CMIA)

Our first attack, the Cascading Membership Inference Attack (CMIA), is an attack-agnostic framework designed to exploit this membership dependence for a more powerful attack. However, capturing this dependence is not easy. By definition, the attacker doesn’t know the true membership of any query instance to begin with. We borrow the intuition behind Gibbs sampling: estimate a joint distribution from marginal distributions by iterative conditional updates. In our setting, an existing MIA provides approximate marginal membership probabilities for each instance. CMIA then iteratively refines membership predictions by conditioning on increasingly confident labels. Concretely, each cascading iteration works as follows:

- Run the base MIA to obtain marginal membership scores for all query instances.

- Fix the most confident predictions as pseudo membership labels.

- Train conditional shadow models using those pseudo-labels to implicitly capture joint behaviors.

- Repeat the process.

CMIA can plug into any shadow-based MIA to boost its performance. Unlike full Gibbs sampling (which may require many iterations to converge to the joint distribution), CMIA tends to reach strong performance within just a few cascading steps.
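The cascading steps above can be sketched as a short loop. This is a minimal illustration under stated assumptions, not our released implementation: `BaseMIA`, `cascade`, and `toy_base_mia` are hypothetical names, the base attack is assumed to return a score in [0, 1] per query, and confidence is measured as distance from 0.5. The toy base attack trains no shadow models; it only mimics the interface.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: given the pseudo-labels fixed so far
# (query index -> 0/1), return a membership score in [0, 1] per query.
# A real base MIA would train conditional shadow models here.
BaseMIA = Callable[[Dict[int, int]], List[float]]


def cascade(base_mia: BaseMIA, n_steps: int, k: int) -> Tuple[List[float], Dict[int, int]]:
    fixed: Dict[int, int] = {}
    scores = base_mia(fixed)  # initial marginal scores, nothing conditioned on yet
    for _ in range(n_steps):
        remaining = [i for i in range(len(scores)) if i not in fixed]
        if not remaining:
            break
        # Most confident first: scores farthest from 0.5.
        remaining.sort(key=lambda i: abs(scores[i] - 0.5), reverse=True)
        for i in remaining[:k]:
            fixed[i] = int(scores[i] >= 0.5)  # freeze as pseudo membership label
        scores = base_mia(fixed)  # re-run the base attack conditioned on pseudo-labels
    return scores, fixed


def toy_base_mia(fixed: Dict[int, int]) -> List[float]:
    """Stand-in base attack: fixed instances keep their label, others keep raw scores."""
    raw = [0.95, 0.02, 0.60, 0.45]
    return [float(fixed[i]) if i in fixed else s for i, s in enumerate(raw)]


scores, fixed = cascade(toy_base_mia, n_steps=1, k=2)
print(fixed)
print(scores)
```

How many predictions to fix per step (`k`) and how many steps to run are tuning choices in this sketch; fixing too many low-confidence predictions early would propagate wrong pseudo-labels into later iterations.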

Proxy Membership Inference Attack (PMIA)

CMIA is powerful but relies on the adaptive setting, since it trains shadow models after seeing the query set. That makes it inapplicable in the non-adaptive setting, where the attacker cannot retrain on the queries.

To address non-adaptive scenarios, we formalize the problem as Marginal MIA and derive the optimal attack strategy. Interestingly, this optimal attack, which is based on a likelihood ratio test, also provides a theoretical justification for why MIAs should focus on achieving a high True Positive Rate (TPR) at a very low False Positive Rate (FPR).
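For readers unfamiliar with the metric, here is a minimal sketch of how TPR at a fixed low FPR can be computed from attack scores. The function name `tpr_at_fpr` and the example scores are made up for illustration; the thresholding rule (predict "member" when the score is strictly above the threshold) is one common convention, assumed here.

```python
import math
from typing import Sequence


def tpr_at_fpr(
    member_scores: Sequence[float],
    nonmember_scores: Sequence[float],
    target_fpr: float,
) -> float:
    """Fraction of members detected when at most target_fpr of non-members are flagged."""
    ranked = sorted(nonmember_scores, reverse=True)
    # Number of non-members we may misclassify (small epsilon guards float rounding).
    allowed_fp = math.floor(target_fpr * len(ranked) + 1e-9)
    # Strictly-greater comparison keeps false positives at or below allowed_fp.
    threshold = ranked[allowed_fp] if allowed_fp < len(ranked) else -math.inf
    hits = sum(1 for s in member_scores if s > threshold)
    return hits / len(member_scores)


members = [0.9, 0.8, 0.4, 0.95]
nonmembers = [0.05, 0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.5, 0.6, 0.85]
print(tpr_at_fpr(members, nonmembers, target_fpr=0.1))  # 0.75
print(tpr_at_fpr(members, nonmembers, target_fpr=0.0))  # 0.5
```

In practice the target FPR is usually much smaller than in this toy example, which requires correspondingly many non-member scores for the estimate to be meaningful.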

But how do we approximate the in behavior for a query instance if we are not allowed to train shadow models on that instance? We propose a pragmatic solution: use proxy instances.

An adversary can train shadow models before observing the query set; those shadow models have in behaviors for whatever data they trained on. By finding training examples (from the adversary’s available data) that are similar to a query instance, we can use those examples’ behaviors as proxies for the query instance’s in distribution. We design proxy-selection strategies at three granularities (global, class, and instance-level) and use them as proxies to construct the likelihood ratio test for attack. Despite the simplicity of this idea, PMIA performs surprisingly well and often outperforms existing non-adaptive methods by a substantial margin.
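To make the proxy idea concrete, here is a rough sketch of a class-level variant. It rests on several assumptions that are not taken from the paper: the attack signal is a single scalar per instance, the "in" and "out" signals are each modeled as a Gaussian (in the style of LiRA), and the "out" signals come from querying the pre-trained shadow models on the query instance itself. All names (`select_class_proxies`, `proxy_llr`, `shadow_records`) are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def gaussian_logpdf(x: float, mu: float, sigma: float) -> float:
    return -math.log(sigma * math.sqrt(2 * math.pi)) - 0.5 * ((x - mu) / sigma) ** 2


def select_class_proxies(
    shadow_records: Sequence[Tuple[int, float]], query_label: int
) -> List[float]:
    """Class-level proxies: 'in' signals of shadow training points sharing the query's label."""
    return [signal for label, signal in shadow_records if label == query_label]


def proxy_llr(
    query_signal: float,
    proxy_in_signals: Sequence[float],
    out_signals: Sequence[float],
    min_sigma: float = 1e-3,
) -> float:
    """Log-likelihood ratio of 'member' vs 'non-member' under Gaussian fits (assumption)."""
    mu_in, sd_in = mean(proxy_in_signals), max(stdev(proxy_in_signals), min_sigma)
    mu_out, sd_out = mean(out_signals), max(stdev(out_signals), min_sigma)
    return gaussian_logpdf(query_signal, mu_in, sd_in) - gaussian_logpdf(
        query_signal, mu_out, sd_out
    )


# (label, signal) pairs measured on data the shadow models WERE trained on.
shadow_records = [(0, 2.1), (0, 1.9), (0, 2.0), (1, 3.5), (1, 3.7)]
# Signals of the query on shadow models, none of which saw it during training.
out_signals = [0.1, -0.1, 0.0, 0.2]

proxies = select_class_proxies(shadow_records, query_label=0)
print(proxy_llr(1.95, proxies, out_signals) > 0)  # True: looks like a member
print(proxy_llr(0.05, proxies, out_signals) > 0)  # False: looks like a non-member
```

Global and instance-level selection would only change how `proxies` is built: all shadow training points in the first case, and the points most similar to the query in the second.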

Conclusion: A new frontier for MIA

Our work opens a new avenue for improving membership inference by explicitly modeling dependencies between instances. We have only scratched the surface, yet the initial gains are significant. We believe there is substantial follow-up work to be done, and that exploring joint inference will reshape how MIAs (and their defenses) are designed.

However, this increased power doesn’t come for free. CMIA, for instance, works by iteratively running the base attack. If the base attack is already computationally expensive (like LiRA